In this blog post, you will learn the importance of Inter-task Access Synchronization. We will discuss the race condition with its solution including the mutual exclusion and semaphore. So let’s start the Inter-task access synchronization blog post with some problems and their solutions.

The Target: Shared Resource

Shared Resource is a globally shared resource that is made available to multiple contexts. Examples of shared resources are global memory and peripheral devices. By global memory, I mean a piece of data whether it is a global variable or a global data structure. Also, global functions which are not thread-safe can be considered as global memory (often overlooked by programmers).

The Problem: Race Condition

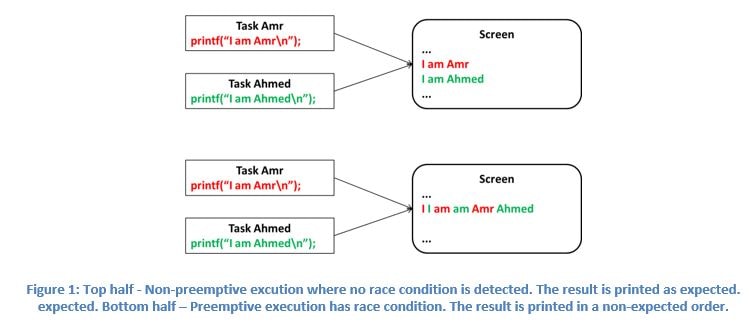

Race condition in software arises when the end result depends on the sequence of execution of the tasks. Its effect is serious when the execution sequence is not intended by programmers and results are not predicted.

In purely non-preemptive environment – where IO polling is employed – the problem does not exist.

Adding interrupts, introduced the problem. An ISR could manipulate a shared resource that is being currently accessed by interrupted tasks. Boom, data corruption!!

By analogy to ISRs, adding preemption makes the problem worse. Higher priority tasks – in addition to ISRs – could manipulate shared resources currently access by the preempted task.

The Solution: Mutual Exclusion

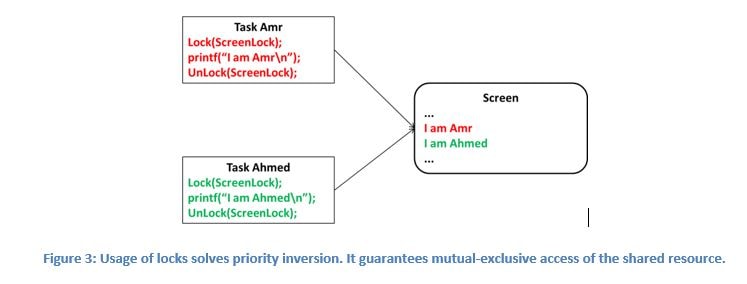

Race conditions could be avoided if the access to shared resources was atomic. No other context will preempt/interrupt the current context during the access. Unfortunately, the access is not atomic.

The other way to avoid race conditions is to ensure that shared resources are accessed exclusively. Regardless of preemption/interruption, one and only context is allowed access to the shared resource. This mechanism is referred to as Locking.

Different Solutions: Different Locks

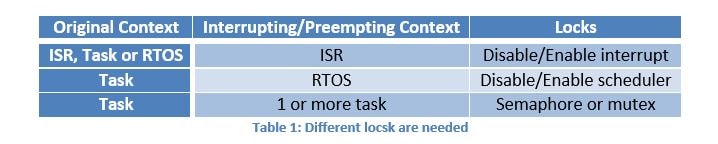

Locks differ according to the interrupting code. If you are writing a piece of code, you need to:

- Identify the nature of your code you are writing (task, RTOS or ISR)

- Identify shared resources in your code.

- Identify higher priority code that could interrupt/preempt (task, RTOS or ISR) your code and access shared resources identified in step 2.

- Identify proper locks and protect the shared resources.

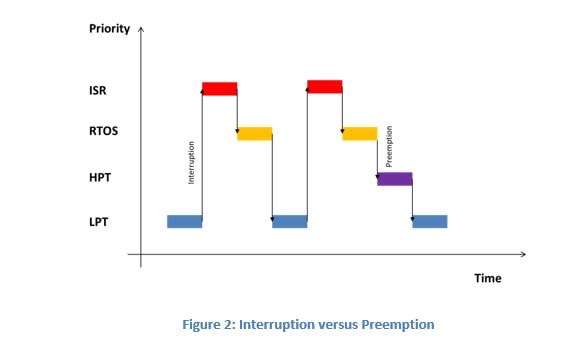

Referring to figure 2, an ISR could interrupt another ISR, the RTOS or a task. To protect your code from higher priority ISRs, a simple lock is used, disabling and enabling interrupts.

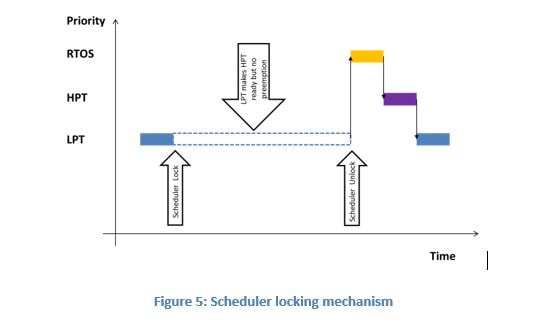

The RTOS controls the execution of tasks. If a task wants to avoid preemption while accessing shared resources, it should disable and enable the scheduler.

If the shared resource is shared among a group of tasks, different locks are used. These are the semaphore and the mutex (described later).

If you are a beginner and looking for a good Online Course on RTOS, it is my recommendation you can check “Introduction to Real-Time Operating System (RTOS)“. This course is prepared by Amr Ali exclusively for beginners. The course contains video lectures of 3-hour length with 2 downloadable resources covering all basic topics of RTOS.

Enroll In Course

Protection from Interrupts

Disabling and enabling interrupts are not simple as they appear.

One side effect of using this mechanism is the interrupt latency. During the access to the shared resources interrupts are disabled which adds to the interrupt latency. As a guideline, try to make your shared resources access as short as possible.

The code accessing the shared resources must not depend on the interrupt you are disabling. Otherwise, your code will crash.

Also, be aware that using this mechanism may change the interrupt state unintentionally. This situation may occur in reality if these locks are protected recursively without the knowledge of the programmer for example.

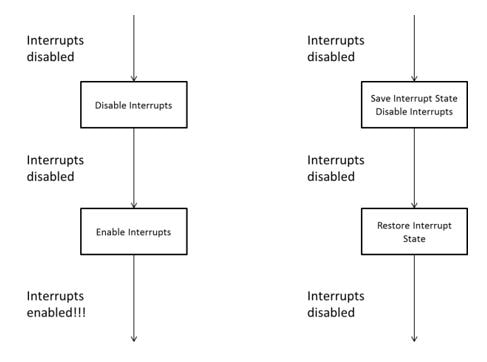

In most RTOSes, like uCOS or OSEK, they have a version that supports shared resource recursive protection. The lock operation, instead of disabling interrupts, stores the previous interrupt state then disables the interrupt. The unlock operation then restores the previous interrupt state whether it was enabled or disabled.

Figure 4: Left – Simple disable/enable interrupt lock may alter the previous interrupt state after the release of the shared resource. Right – A bettering protection from interrupt mechanism that preserves the previous interrupt state after the release of the shared resource.

Protection from the RTOS

During the access of the shared resource, scheduler is disabled. The preemptive kernel is temporarily non-preemptive.

The code accessing the shared resources must not depend on the scheduler you are disabling. Otherwise, your code will crash.

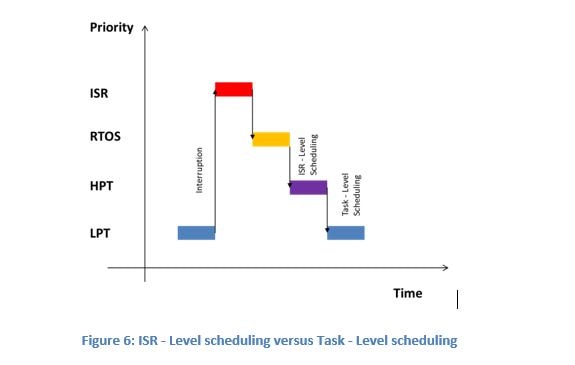

It is worth mentioning that protecting from interrupts does not necessitate protection from the RTOS and vice-versa. Scheduling points in RTOS can be classified into two categories: task-level scheduling and interrupt-level scheduling.

When you disable the scheduler both scheduling categories are disabled while interrupts can occur. Disabling the interrupts will disable the interrupt-level scheduling as the ISRs will not run. However, task-level scheduling is not impacted.

Protection from Tasks – I: Semaphore

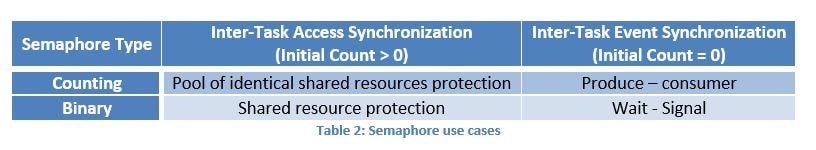

A semaphore is an unsigned counter. There are 2 types of semaphores. Counting semaphore can count from zero to max. The other type is binary semaphore. It can count from zero to 1.

Binary semaphores can be considered as a special type of counting semaphores. Some RTOSes only implement counting semaphores and leaves it the programmer to use them as binary semaphores.

The semaphore lock operation tries to decrement the semaphore count if greater than zero. A task that tries locking a zero value semaphore will block. This means that someone already has locked the lock and access to the shared resource protected by the semaphore is prohibited. Access will be granted semaphore count is increased by the unlock operation.

Semaphores have different use cases. Only two of them are related to the shared resource access problem. The other two use cases are related to using the semaphore as a flag rather than a lock (beyond the scope of this article).

Problems with Semaphores

Semaphores do solve race conditions among tasks. But, they have associated problems: starvation, deadlock, and priority inversion.

Starvation is a situation where a low-priority task is not granted access to the shared resources. Whenever this low-priority task tries to take the shared resource, it gets blocked as the shared resource is already taken by another high-priority task. One possible solution to starvation is proper design (selecting proper priorities or scheduling algorithms)

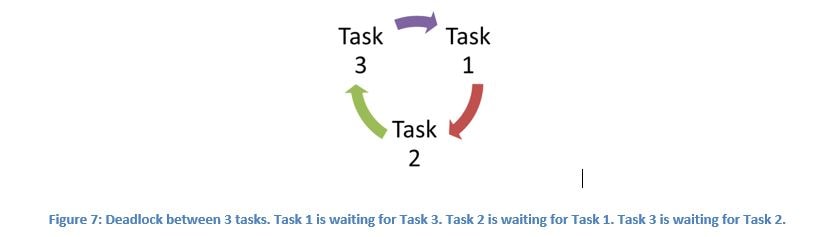

Deadlock is a situation where two or more tasks are waiting for each other resources. One possible solution to deadlock is proper design (ordered locking).

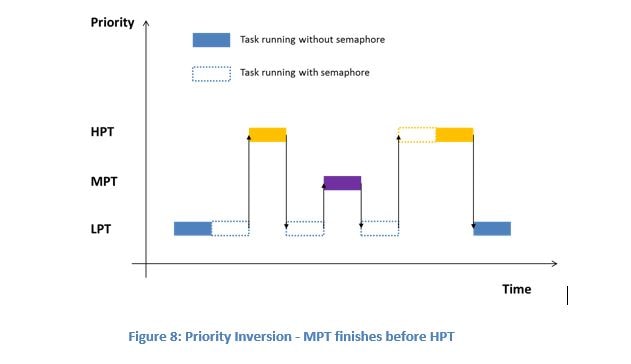

Priority inversion is a situation where a high-priority task is blocked on a low-priority task using a protected shared resource. During this blockage, a medium-priority task (that does not need the shared resource) can finish its work before the high-priority task.

Protection from Tasks – II: Mutex

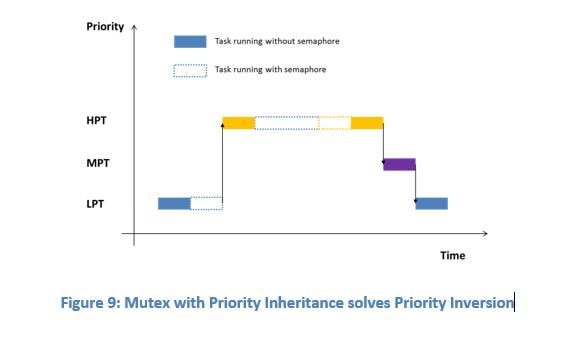

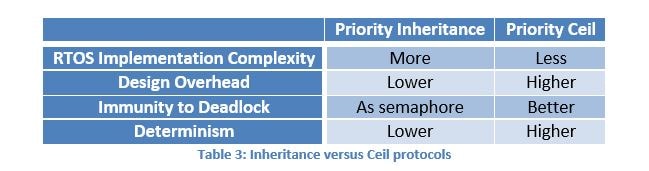

The solution to the semaphore priority inversion was the introduction of a mutex. Mutex is simply a binary semaphore that is used to protect a shared resource with an associated protocol. The main goal of the associated protocol is to solve the priority inversion problem. Two protocols are most common: priority inheritance and priority ceil.

In priority inheritance, a low-priority task is automatically assigned the priority of a higher priority task when it blocks on the mutex. The low-priority task is re-assigned its original priority when it releases the mutex.

In priority ceil, a low-priority task is assigned a ceil priority once it accesses the mutex. The ceil priority must be greater than or equal to the highest priority of the tasks using this particular mutex. The low-priority task is re-assigned its original priority when it releases the mutex. In other words, a low-priority task inherits the ceil priority once the mutex is locked.

Depending on the RTOS you are using, it may implement one or both of these protocols. If you are using a RTOS that implements both algorithms, the following comparison might be useful in selecting the proper protocol.

One final note, in some mutex implementation they support recursive locking. A task that is locking a mutex can lock it again.